More than 100 people have used an artificial intelligence (AI) assistant set up by a campaign against the federal government’s proposed misinformation law to write submission letters opposing the bill, in what appears to be a first for the technology.

Stop Aussie Censorship is a campaign created last month to oppose the Communications Legislation Amendment (Combatting Misinformation and Disinformation) Bill 2023 after an exposure draft of the law was published and a public consultation launched in June.

The group behind the campaign, Australian Democracy By Discourse, describes itself on its website as “a significantly-sized and diverse group of Australians from across the political spectrum who came together out of shared concern for this dangerous bill”.

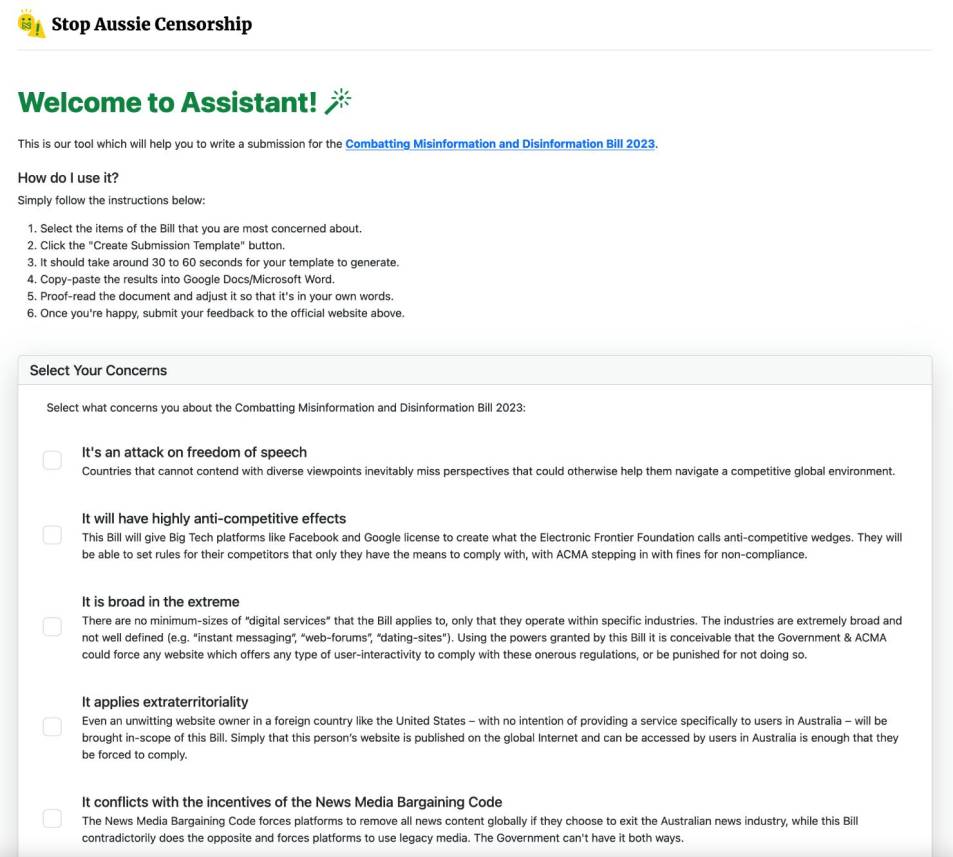

As part of its efforts to defeat the bill, the group has created a “submission assistant” on its website that generates a unique, customised letter using artificial intelligence that campaign supporters can submit to the public consultation process.

The tool lists 13 concerns — ranging from “it’s an attack on freedom of speech” to “it will destroy democracy” — that users can select to shape the assistant’s output. It then produces a unique letter each time.

Crikey compared two letters produced by Stop Aussie Censorship’s submission assistant using the same selected concerns and found that they included slight variations in prose while sticking to the same structure and arguments.

Stop Aussie Censorship spokesperson Reuben Richardson said the submission assistant was created because the campaign wanted to help supporters who might otherwise find writing a submission daunting. He cited inexperience, difficulty writing due to learning disorders, and being time-poor as some of the obstacles the AI tool could help overcome.

“In some cases, Australians simply don’t know how to get started voicing their concerns, which is extremely damaging for democracy,” he told Crikey.

Richardson said that he hopes that AI-generated submissions are not viewed as less authentic.

“To believe that a submission that has been generated using AI in part or in whole is less authentic, without understanding the individual circumstances of the submitter, appears to us to be a little presumptuous,” he said.

He noted that the campaign prefers that supporters write their own submissions, but also said that other popular campaign tactics are even less personal.

“We also feel that petitions and form letters are too easily ignored by lawmakers — simply reading one and throwing the rest in the trash can,” he said.

The emergence of accessible artificial intelligence language models that can almost instantaneously produce endless amounts of text has presented a new challenge to institutions that take submissions from the public. Online booksellers, science-fiction magazines and scientific journals have all had to contend with an influx of often low-quality and copyright-infringing AI-generated content.

Communications Minister Michelle Rowland declined to comment.

I love the idea that those writing to oppose the Disinformation Bill might have ‘learning disorders.’

This technology is an absolute boon to the “flood the zone with s**t” types.

As someone who often supports (what I consider to be) worthy causes, I note that AI still manages to be hopelessly verbose. When using the templates the worthy organisations provide, I do my best to delete the verbose and insert my own plain speakin’. No abusive, libelous or strong language included of course.

But I do think if you want our elected representatives’ office staff to pay some regard to what you have to say, keep it succinct, keep it pithy.

I hope some of my worthy organisations are reading this!

Its not only them. I recently made a submission to a consultancy firm conducting a ‘consultation’ with residents about a proposed new mobile phone tower in a historic precinct. The submission (from a community group) took some time to put together. I received a lengthy response just one working day later, which seemed a little odd, insisting that the points about heritage impacts in our submission were all groundless and that we had nothing at all to worry about. I ran it through an app that checks whether text is AI-generated; the result was a 20% likelihood that it was.

It looks as though making written submissions is about to become even more pointless than now.

How does one tell? The AI is more articulate.

AI probably knows how to use an apostrophe.

No spelling, grammar or syntax errors.