When Nine blamed an “automation” error for publishing an edited image of Victorian MP Georgie Purcell in which her clothing and body had been modified, it drew some scepticism.

As part of an apology to Purcell, 9News Melbourne’s Hugh Nailon said that “automation by Photoshop created an image that was not consistent with the original”.

A response from Adobe, the company behind Photoshop, “cast doubt” on Nine’s claim, according to the framing of multiple news outlets. “Any changes to this image would have required human intervention and approval,” a spokesperson said.

This statement is obviously true. Nine did not claim that Photoshop edited the image by itself (a human used it), that it used automation features of its own accord (a human used them) or that the software broadcast the resulting image on the Nine Network by itself (a human pressed publish).

But this Adobe statement doesn’t refute what Nine said either. What Nailon claimed is that the use of Photoshop introduced changes to the image through the use of automation that were erroneous. Like a broken machine introducing a flaw during a manufacturing process, the fault was Nine’s for its production but the error was caused by Photoshop.

If Nine’s account is legitimate, is it possible that Adobe’s systems nudged Nine’s staff towards depicting Purcell wearing more revealing clothing? Was Purcell right to say she “can’t imagine this happening to a male MP”?

I set out to both recreate Nine’s graphic and to see how Adobe’s Photoshop would treat other politicians.

The experiment

Although Nailon did not specify it, the “automation” he mentioned is almost certainly one of Adobe Photoshop’s new generative AI features. One of these, introduced last year, is the generative expand tool, which increases the size of an image by filling in the blank space with what it assumes would be there, based on its training data of other images.

In this case, the claim seems to be that someone from Nine used this feature on a cropped image of Purcell, and that it generated her showing midriff and wearing a top and skirt rather than the dress she was actually wearing.

It’s well established that bias occurs in AI models. Popular AI image generators have already proven to reflect harmful stereotypes by generating CEOs as white men or depicting men with dark skin as carrying out crimes.

To find out what Adobe’s AI might be suggesting, I used its features on the photograph of Purcell, along with pictures of major Australian political party leaders: three men (Anthony Albanese, Peter Dutton and Adam Bandt) and two women (Pauline Hanson and Jacinta Allan, who also appeared in Nine’s graphic with Purcell).

I installed a completely fresh version of Photoshop on a new Adobe account and downloaded images from news outlets or the politicians’ social media accounts. I tried to find similar images of the politicians. This meant photographs taken from the same angle and cropped just below the chest. I also used photographs that depicted politicians in formal attire that they would wear in Parliament as well as more casual clothing like T-shirts.

I then used the generative fill function to expand the images downwards, prompting the software to generate the lower half of the body. Adobe allows you to enter a text prompt when using this feature to specify what you would like to generate when expanding. I didn’t use it. I left it blank and allowed the AI to generate the image without any guidance.

Photoshop gives you three possible options for AI-generated “fills”. For this article, I only looked at the first three options offered.

The results

What I found was that not only did Photoshop depict Purcell wearing more revealing clothing, but that Photoshop suggested a more revealing — sometimes shockingly so — bottom half for each female politician. It did not do so for the males, not even once.

When I used this cropped image of Purcell that appears to be the same one used by Nine, it generated her wearing some kind of bikini briefs (or tiny shorts).

We’ve chosen not to publish this image, along with other images of female MPs generated with more revealing clothing, to avoid further harm or misuse. But generating them was as simple as clicking three times in the world’s most popular graphic design software.

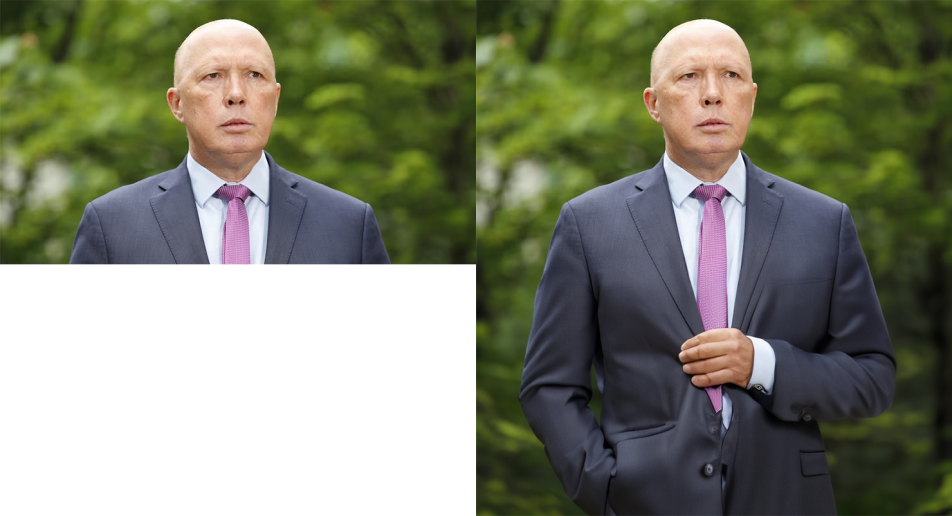

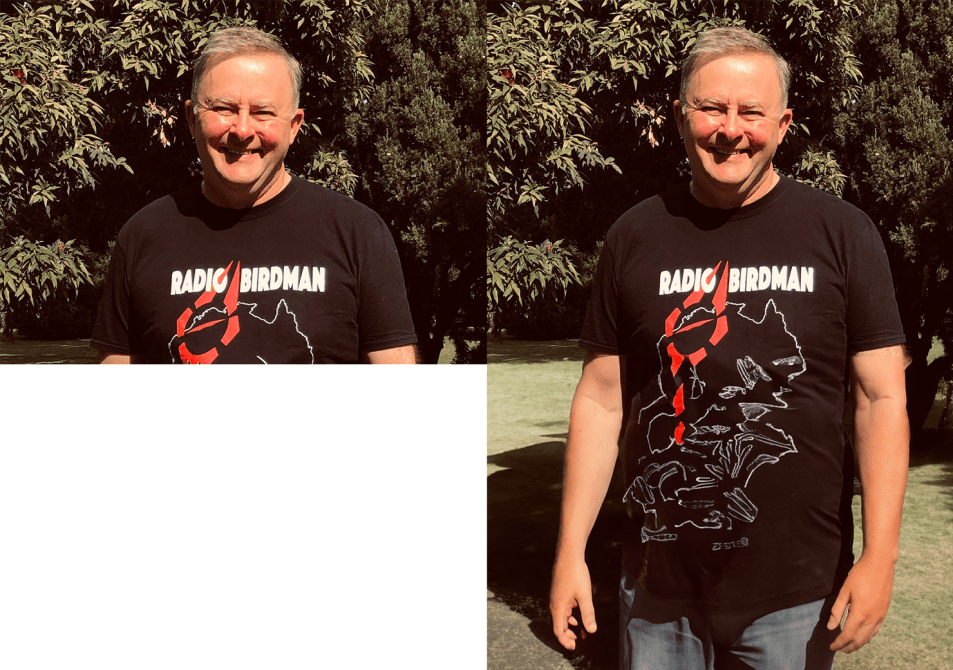

When I used Photoshop’s generative AI fill on Albanese, Dutton and Bandt in suits, it invariably returned them wearing a suit.

Even when they were wearing T-shirts, it always generated jeans or other full-length pants.

But when I generated the bottom half of Hanson in Parliament or from her Facebook profile picture, or from Jacinta Allan’s professional headshot, it gave me something different altogether. Hanson in Parliament was generated by AI wearing a short dress with exposed legs. On Facebook, Hanson was showing midriff and wearing sports tights. Allan was depicted as wearing briefs. Only one time — an image of Allan wearing a blouse taken by Age photographer Eddie Jim — did it depict a woman MP wearing pants.

This experiment was far from scientific. It included very few attempts on a small number of people. Despite my best efforts, the images were still quite varied. In particular, male formalwear is quite different to female formalwear in form even though it serves the same function.

But what it shows is that Adobe Photoshop’s systems can suggest women are wearing more revealing clothing than they actually are, without any prompting. I did not see the same for men.

While Nine is completely to blame for letting Purcell’s image go to air, we should also be concerned that Adobe’s AI models may have the same biases that other AI models do. With as many as 33 million users, Photoshop is used by journalists, graphic designers, ad makers, artists and a plethora of other workers who shape the world we see (remember Scott Morrison’s photoshopped sneakers?). Most of them do not have the same oversight that a newsroom is supposed to have.

If Adobe has inserted a feature that’s more likely to present women in a sexist way or to reinforce other stereotypes, it could change how we think about each other and ourselves. The changes won’t necessarily have to be as big as those made to Purcell’s image; they could be small edits spread across the endless number of images produced with Photoshop. Death of reality by a thousand AI edits.

Purcell noticed these changes, was able to call them out, and received a deserved apology from Nine that publicly debunks the changes made to her image. Not everyone will get the same result.

Will generative AI’s apparent gender discrimination affect how you choose to use this new technology? Let us know by writing to letters@crikey.com.au. Please include your full name to be considered for publication. We reserve the right to edit for length and clarity.

Thanks. This article has certainly informed me about Adobe’s AI feature and how it may have contributed to the Georgie Purcell image furore. Perhaps, for clarity, Adobe could name its AI function ‘common prejudice & bias enhancement’? Maybe that’s how most or all AI should be viewed.

So, not even AI can find Dutton’s eyebrows, or the faintest trace of human expression.

But AI gives poor Peter puffy, artificial-looking fingers.

Hi Cam, I added this to my comment on yesterday’s article on the subject but it’s just as relevant here:

Photoshop isn’t “adding skimpy outfits to women”, it’s just choosing what sort of image is likely to best fit, using some AI to measure and trim art and styles from its training corpus.

Adobe make a selling point of this – its training corpus is the absolutely ginormous Adobe Stock Photography library, and they use it to address the copyright issues text systems like ChatGPT are up against. If your work is in the Adobe Stock library then you’ve agreed to allow it to be used to train Adobe Firefly AI. And since it’s a stock photography library, used for advertising, marketing and content creation, then it skews *away* from a documentary slice of life and towards what most appeals in the advertising and marketing sectors. We knew this before we ever fired up Firefly and its generative art, and it’s a limit that (for the most part) we’re happy to live with. Whether it’s right that advertisers and marketers prefer women in skimpy outfits is another debate, but I’d note that most advertisers will choose imagery that sells the most product.

It is not a perfect system. If you have access to Photoshop, or one of your colleagues does, ask it to generate “echidna” and see what eldritch horrors it comes up with 🙂

I thought Cam explained it well, the generative AI is propagating sexist stereotypes based on its (sexist) training set. I don’t see him claim that it’s directly “adding skimpy outfits to women”, that’s just a side effect. It’s amazing technology as you say. I fear what would happen with First Nations people!

Also, I’d like to thank Cam for not including the Hanson image, but I’m disappointed not to see Adam in Abbott style speedos, and I think Dutton may also have triggered the breast augmentation routine.

I wonder what triggered the censor…

I thought Cam explained it well, the generative AI is propagating sexist stereotypes based on its (sexist) training set. I don’t see him claim that it’s directly “adding skimpy outfits to women”, that’s just a side effect. It’s amazing technology as you say. I fear what would happen with First Nations people!

Also, I’d like to thank Cam for not including the Hanson image, but I’m disappointed not to see Adam in Abbott style speedos, and I think Duts may also have triggered the chest augmentation routine.

I thought Cam explained it well, the generative AI is propagating misogynistic stereotypes based on its training set. I don’t see him claim that it’s directly “adding skimpy outfits to women”, that’s just a side effect. It’s amazing technology as you say. I fear what would happen with First Nations people!

Also, I’d like to thank Cam for not including the Hansn image, but I’m disappointed not to see Adam in Tony style speedos, and I think Dutts may also have triggered the chest augmentation routine.

Yes this is a classic example of a journalist learning something new for a story and then thinking they’re expert.

Human beings at Nine are 100% responsible for this. Stop blaming your tools!

It was nothing like ‘a classic example of a journalist learning something new for a story and then thinking they’re expert’. It was a journalist conducting a trial and presenting the results with enough detail to make it repeatable, before drawing conclusions from his results — with appropriate caveats about the sample size and so on. Pretty good journalism.

And the people who actually use the tools on a regular basis contradict them….

As a general rule, journalists are experts at nothing.

Do you ever read a newspaper article about your area of expertise?

Exactly. It’s never pretty.

PS Do you actually disagree with my conclusion?

“Human beings at Nine are 100% responsible for this. Stop blaming your tools!”

Journalists (and people generally) are also terrible at identifying the things we have control over and the things we don’t.

We need to get better at that before AI becomes more of a thing.

Otherwise “the machine made me do it” will become the new “I was just following orders”.

If you are referring to Michael Priest’s comment, it does not do what you say. It does not contradict the article, it clearly adds a comment and a point of view. Nothing in it says any part of the trial or test described in the article is incorrect, although it offers an alternative opinion. Which is fine, and helps the conversation. Unlike you, charging in to condemn the whole affair and insult the author. One wonders why exactly you are so excitable and so cross.

You bleat about Cam Wilson claiming to be an expert on Photoshop AI, but he has done no such thing. This is all in your head.

You want to know if I disagree with the conclusion of your initial comment. It is obvious I disagree with the first sentence. As for the remaining two, your view is fair enough; blame can hardly be apportioned to an unconscious object or tool. But then I don’t see that Cam Wilson has written anything that attempts to do that, so I fail to see why you adopt that accusing tone. His article is about what happens when Adobe’s AI is used in a particular way. Nothing in that can or could change the responsibility of those that use it.

And one wonders why you are so excitable and so cross about my comment!

Maybe it’s an internet thing. I’m sure we’d get along in person.

That’s the whole point. He used it in a certain way with a clear agenda (in case you missed it, the current media obsession is “killer AI on the loose”; I’m exaggerating). It doesn’t help that the media is particularly worried about being replaced by the tech giants (a concern I share).

This article is no different than Larry from The Today Show trying out the latest travelator.

Hey Michael, nice again to chat with you.

Disagree that it isn’t adding outfits to people. I’m not saying it’s consciously doing it, but it is doing it.

I understand that the various quirks (and potential biases) of Adobe’s model are something you’re aware of. The point is that many people are not aware of them. I don’t think just hoping that users remember will work any more than hoping people wouldn’t use ChatGPT in high-stakes situations like legal battles.

Always grateful to hear your take!

Cam, I also added this response to yesterday’s article and it’s also relevant:

Hi Cam, you’re correct in that Photoshop can and will generate such outfits, and yes, using no prompts and allowing the system to come up with variants will produce a range of responses that can include revealing imagery, but

a. it will generate 3 options to choose from – an *operator*, not Photoshop, makes the choice of which to use, or to scrap all three and try again if nothing suitable emerges, and

b. generative fill only creates new imagery on the blank part of the canvas and the fringe area connecting the existing image with the newly generated stuff. It does NOT alter non-adjacent areas of the existing image to increase a bust size, for example.

Wow. Interesting experiment and even more interesting results. One problem with AI is that it’s only as good as the people who create it. It has no intelligence of its own, but rather perpetuates and enhances human biases.

So if the top half of a figure has unusual features – like piercings, heavy tattoos, prominent injuries – it will extrapolate from those features in selecting what to include and exclude in the bottom half. It draws on patterns or stereotypes and it’s up to the human editor as to whether they accept or reject the output.

The patterns or stereotypes were based on images captured originally, and in their millions, by humans.

Which is exactly why it could be so very dangerous in the coming years. Especially if used militarily.